In this article review, I will discuss the article “Classifying Social Media Bots as Malicious or Benign Using Semi-Supervised Machine Learning”, written by Innocent Mbona and Jan H P Eloff and published in 2023. I will cover how the article relates to the principles of the social sciences, the study’s research questions, the research methods used, the types of data and analysis involved, and the article’s overall contributions to society.

The topic of this article relates to the principles of the social sciences because the authors explain that they first needed to study the behavior of bots and compare it to the behavior of actual people. Fortunately, a tool for this had already been designed by Ferrara et al.: the Botometer. The researchers build on this tool and aim to extend it so that it can also recognize the difference between benign and malicious bots. The study’s research question is whether semi-supervised machine learning can identify not only the difference between a bot and a human but also the difference between benign and malicious bots. The article relies on archival research, drawing on several datasets that consist of metadata about benign and malicious Twitter bots (Jan H P Eloff et al.). The researchers then performed feature selection (FS) using five methods: Benford’s Law (BL), Ensemble Random Forest (ERF), False Positive Rate (FPR), False Discovery Rate (FDR), and Familywise Error (FWE).

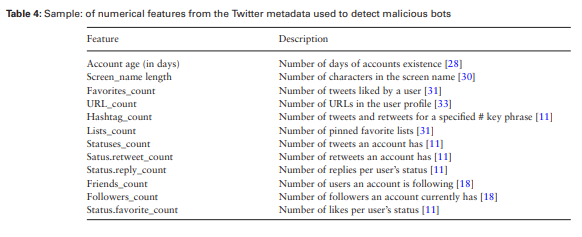

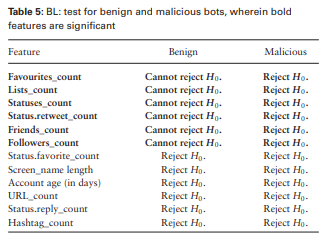

For BL, Table 4 (shown above) lists features that were used in past work to differentiate between humans and malicious bots, while Table 5 (shown above) compares the BL distribution with the actual distribution of each feature (Jan H P Eloff et al.). The features shown in bold in Table 5 are the ones that are significant for identifying which bots are benign and which are malicious.
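Benford’s law states that in many naturally occurring collections of numbers, the leading digit d appears with probability log10(1 + 1/d). A 1 therefore leads about 30% of the time and a 9 less than 5% of the time. The minimal Python sketch below shows the kind of comparison Table 5 describes: it measures how far a numeric feature’s leading-digit distribution is from Benford’s. The deviation measure (mean absolute deviation), the function names, and the sample values are all assumptions made for illustration. They are not taken from the paper, whose exact test may differ.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Optional

# Benford's law: expected share of numbers whose leading digit is d, for d = 1..9
BENFORD: Dict[int, float] = {d: math.log10(1 + 1 / d) for d in range(1, 10)}


def leading_digit(value: int) -> Optional[int]:
    """First digit of a positive integer count; None for zero or negative values."""
    if value <= 0:
        return None
    return int(str(value)[0])


def first_digit_distribution(values: Iterable[int]) -> Dict[int, float]:
    """Observed share of each leading digit 1-9 among the positive values."""
    digits = [d for d in map(leading_digit, values) if d is not None]
    if not digits:
        raise ValueError("need at least one positive value")
    counts = Counter(digits)
    return {d: counts[d] / len(digits) for d in range(1, 10)}


def benford_mad(values: Iterable[int]) -> float:
    """Hypothetical helper: mean absolute deviation between observed and Benford
    first-digit shares. Lower values mean the feature follows Benford's law more closely."""
    observed = first_digit_distribution(values)
    return sum(abs(observed[d] - BENFORD[d]) for d in range(1, 10)) / 9


# Hypothetical feature columns, for illustration only
spread_out = [2 ** k for k in range(1, 200)]  # spans many orders of magnitude
clustered = [700 + i for i in range(100)]     # every value starts with 7
print(benford_mad(spread_out) < benford_mad(clustered))  # → True
```

A feature whose values span many orders of magnitude tends to fit Benford’s law closely. A feature confined to a narrow range does not. A large deviation is the kind of signal that could flag a feature as worth examining.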

For ERF, the study found that status_count, followers_count, friends_count, listed_count, favourite_count, and retweet_count are important for differentiating between benign and malicious bots (Jan H P Eloff et al.). Although BL was found to produce similar results, the authors noted that BL was more computationally efficient.

The FPR method selects significant features based on p-values computed using either a chi-squared test or analysis of variance (ANOVA), with a significance level (alpha) of 0.05, and FDR produced the same result. FWE uses the same alpha as FPR and FDR, but it identifies significant features using the Bonferroni correction, treating alpha as an upper bound on the familywise error rate.
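The minimal Python sketch below makes the difference between these three criteria concrete by applying each rule to the same set of per-feature p-values at alpha = 0.05. It assumes the p-values have already been computed, for example by a chi-squared test or ANOVA. For FDR it uses the Benjamini-Hochberg procedure, a standard choice that is assumed here rather than taken from the paper. The function names and the feature names and p-values are hypothetical and serve only as an illustration.

```python
from typing import Dict, List


def select_fpr(pvalues: Dict[str, float], alpha: float = 0.05) -> List[str]:
    """False positive rate: keep every feature whose own p-value is below alpha."""
    return sorted(name for name, p in pvalues.items() if p < alpha)


def select_fdr(pvalues: Dict[str, float], alpha: float = 0.05) -> List[str]:
    """False discovery rate via the Benjamini-Hochberg step-up procedure:
    find the largest p-value p_(k) with p_(k) <= alpha * k / m, keep all p <= it."""
    m = len(pvalues)
    cutoff = None
    for rank, p in enumerate(sorted(pvalues.values()), start=1):
        if p <= alpha * rank / m:
            cutoff = p  # values are ascending, so the last pass is the largest
    if cutoff is None:
        return []
    return sorted(name for name, p in pvalues.items() if p <= cutoff)


def select_fwe(pvalues: Dict[str, float], alpha: float = 0.05) -> List[str]:
    """Familywise error via the Bonferroni correction: each p-value must be
    below alpha / m, so alpha bounds the chance of any false selection."""
    m = len(pvalues)
    return sorted(name for name, p in pvalues.items() if p < alpha / m)


# Hypothetical p-values for six features, for illustration only
pvals = {
    "feature_a": 0.001,
    "feature_b": 0.008,
    "feature_c": 0.020,
    "feature_d": 0.041,
    "feature_e": 0.045,
    "feature_f": 0.300,
}
print(select_fpr(pvals))  # → ['feature_a', 'feature_b', 'feature_c', 'feature_d', 'feature_e']
print(select_fdr(pvals))  # → ['feature_a', 'feature_b', 'feature_c']
print(select_fwe(pvals))  # → ['feature_a', 'feature_b']
```

With these made-up numbers, the three rules keep five, three, and two features respectively. FPR is the most permissive rule and FWE (Bonferroni) is the strictest, because its threshold alpha / m shrinks as more features are tested.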

Identifying which bots are benign and which are malicious can also help marginalized groups by limiting attacks on them that rely on malicious bots to spread false news. If such bots could be identified and banned from the platform before they spread fake news (for example, a false claim that a particular group of people caused a terrorist attack), fewer people would suffer from these malicious attacks.

This article also relates to several topics from class, but the one that stands out most to me is that, once again, people are the reason these malicious bots are so harmful. Because some people lack knowledge of cybersecurity, they will click on a link sent to them by a malicious bot, allowing an outside attacker to hack them. Many people also believe everything they see online, so fake news spread by a malicious bot could lead them to harm others, both because of their limited knowledge of cybersecurity and because they are unable to think skeptically about the news. If people were properly informed about these types of bots, they could learn to spot the red flags and avoid believing everything they see or clicking on every link they receive.

The overall contribution of the study to society is that there could be significantly fewer malicious bots, which in turn limits the spread of false news and spam. This would keep people from believing harmful messages that attack different groups and would help ensure that the news bots share on the platform is genuine. It would also protect people from scams by bots that send them random links, which hack victims when clicked.

Article Link: https://academic.oup.com/cybersecurity/article/9/1/tyac015/6972135