Cybersecurity Internship

This course allows students to volunteer to work in an agency related to cybersecurity. Students must volunteer for 50 hours per course credit and complete course assignments.

For my summer internship, I worked as a data analyst for Plasser American, located in Chesapeake, Virginia. My first week consisted of completing a drug test for the company and working through training videos, which was a slow process. After this, I was tasked with watching videos to become familiar with the applications that the company uses, including Jira, Confluence, and Microsoft Office. Jira and Confluence are collaborative tools that help the company organize issues or tasks that need to be performed. Each item can be assigned to a certain person or group and updated to show completion. A major tool in their Microsoft Office suite that I was learning to use is Power Automate, which creates automated tasks that link different tools together.

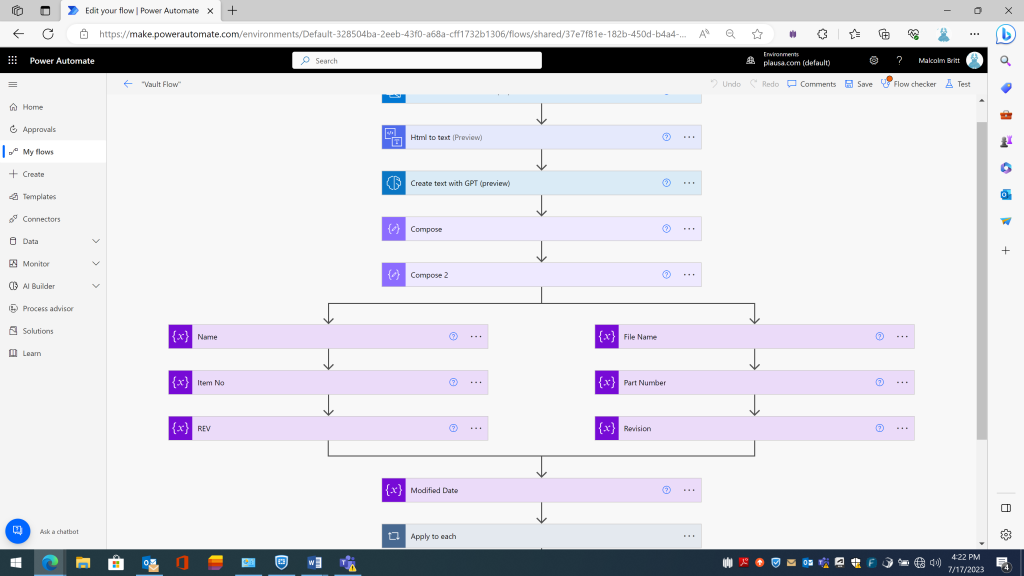

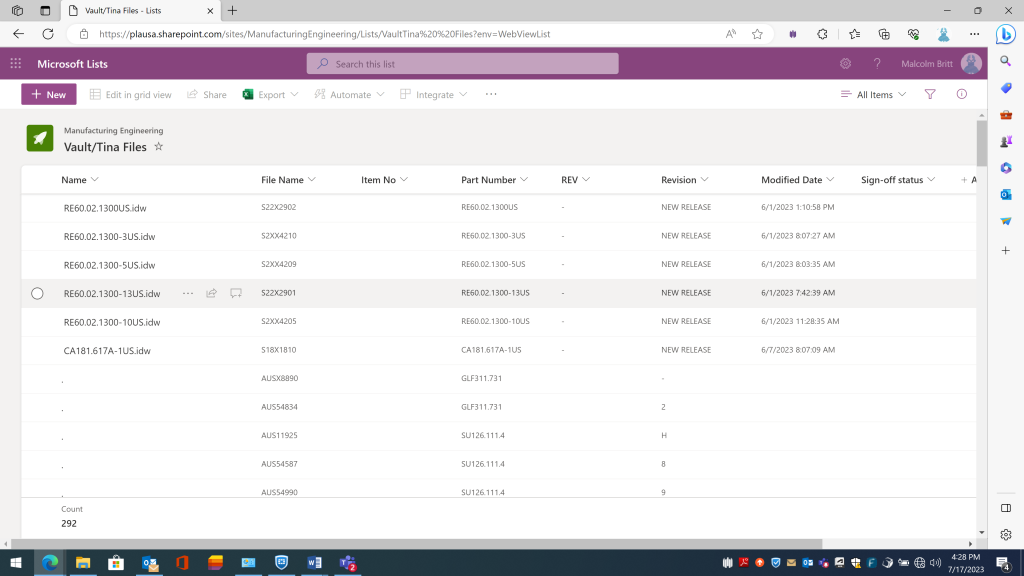

For my first mini project, I was given a rundown of their central problem. They have information about files that needs to be organized in a list called a BOM. This information comes to them through various emails from different places, and they want to be able to route it to specific locations to keep it organized. That is where I came in. The first flow I created extracted key information from files sent from an address called “vault.” The flow then splits the inputs that are pulled from the email. I created 7 variables for this flow and used an Apply to each loop to sort each output from the email into the variables, such as the name or part number of the file. I then created another variable that counts the number of files. A second Apply to each loop organized the information into an Excel sheet and a SharePoint list. This allows the required coworkers to see when new issues are added. After many tests, I got my flow up and running successfully.
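The logic of this first flow can be sketched in Python. This is only an illustration and not the Power Automate flow itself. The email layout (one `Key: value` line per field, with a blank line between files), the sample file names and part numbers, and the function names `parse_vault_email` and `to_rows` are all assumptions made for the example. Only two of the 7 variables are shown, since the name and part number are the only fields named above.

```python
# Hypothetical email body: the real "vault" message layout is not shown in this report,
# and the file names and part numbers below are made-up sample data.
SAMPLE_BODY = """Name: bracket_left.dwg
Part Number: PA-1001

Name: bracket_right.dwg
Part Number: PA-1002
"""


def parse_vault_email(body):
    """Split the body into lines and sort each value into a per-field list.

    Mirrors the first Apply to each loop: every output from the email is
    appended to the variable (list) that matches its field name.
    """
    fields = {}
    for line in body.splitlines():
        if ":" not in line:
            continue  # skip blank or unrecognized lines
        key, value = line.split(":", 1)
        fields.setdefault(key.strip(), []).append(value.strip())
    # Separate counter variable holding the number of files in the email.
    file_count = len(fields.get("Name", []))
    return fields, file_count


def to_rows(fields, file_count):
    """Build one row per file, as the second loop does for Excel and SharePoint."""
    return [
        {"Name": fields["Name"][i], "Part Number": fields["Part Number"][i]}
        for i in range(file_count)
    ]


if __name__ == "__main__":
    parsed, count = parse_vault_email(SAMPLE_BODY)
    for row in to_rows(parsed, count):
        print(row)
```

Keeping one list per field and a separate counter follows the variable-based structure of the flow; in ordinary Python, a list of dictionaries built in a single pass would be the more common choice.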

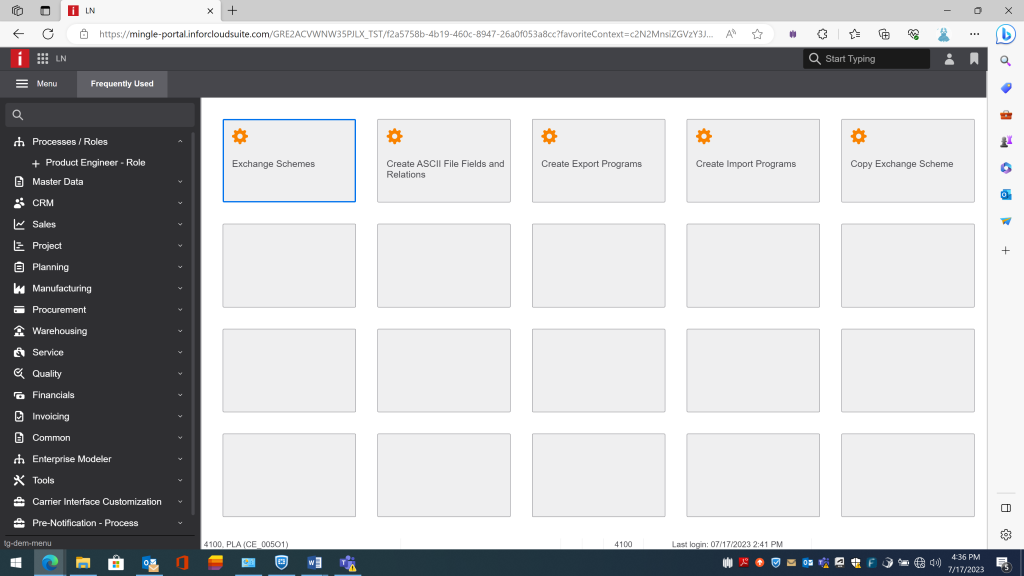

I spent the next 50 hours of my internship testing the flow I had made. The flow was working well, and I used it as the basis for a similar one in Power Automate. This flow also populates a SharePoint list, just like the previous flow, but it was much easier to extract the information from the email. The problem I ran into is that the sender of these emails sends several different types of emails. I had to put a filter on the flow's email-received trigger so that it looks for a certain string in the header of the email. This allowed the flow to run only for these emails. The flows were working great and populating the orders so that the rest of the department could see them and assign them in SharePoint. Along with this, I started to create a flow that works with emails from one of their work applications, Confluence. This flow was simple, as it involved only the date, the serial number, and the customer. I spent hours testing the flow and got it working very well. I am waiting to be added to the email distribution so that the flow can run automatically, but that is taking some time. The next task my supervisor and I are trying to tackle is the export and import of data from tables in Infor LN. This is another system that Plasser American uses for their data. The problem they have been having is that there is no automatic import or export for certain tables that they need (currently the export has to be forced). My job has been to research the exchange schemes that are available for LN. After I finished my research, I compiled the required sessions that the company needs for the imports and exports. The next thing I needed was access to their test environment so that I could begin learning the environment and testing the exchanges.
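The email filter described in this section can be illustrated with a short Python sketch. The marker text `"New Order"`, the function name `should_run_flow`, and the case-insensitive comparison are assumptions for the example; the actual string the flow looks for is not given here.

```python
# Hypothetical marker: the real string the flow checks for is not stated in this report.
ORDER_MARKER = "New Order"


def should_run_flow(header, marker=ORDER_MARKER):
    """Return True only when the email header contains the marker string.

    The comparison ignores case, which is an assumption made for this sketch.
    """
    return marker.lower() in header.lower()


if __name__ == "__main__":
    headers = [
        "New Order: serial 12345",   # made-up example headers
        "Weekly newsletter",
    ]
    for header in headers:
        print(header, "->", should_run_flow(header))
```

Checking the header before any other step keeps unrelated emails from the same sender from ever reaching the extraction logic.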

For the next 50 hours at my internship, I went back to fixing one of the flows I had made, the one for the Confluence notifications. The problem with their Confluence notifications was that much of the information was confusing when it arrived by email. I used a similar approach to the other flows: when the email comes in, the flow converts it to plain text. Before I could write the prompt for the AI, however, I ran into a problem. These emails are extremely long, and the AI cannot extract information from text beyond a certain size; these emails were past that capacity. To fix this, I split the email output in Power Automate by adding a Compose action with the input `substring(variables('EmailBody'), 1, div(length(variables('EmailBody')), 2))`.

This then allowed the AI to extract the information I needed. I told the AI to extract the date, the work order specification, and the customer’s name. I then had the variables Date, Serial Number (the work order), and Client (the customer name). I used the same Apply to each pattern to sort the values into the variable arrays and place them into a SharePoint list. These entries went to a different list than the previous two flows used.
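The splitting step can be shown in Python as a rough equivalent of the Compose expression. The function names and the `max_chars` limit are hypothetical, since the AI's actual capacity is not stated here. One detail worth noting: Power Automate's `substring` uses zero-based indexes, so a start index of 1 skips the first character of the body, while the sketch below starts at index 0.

```python
def split_in_half(text):
    """Return the first and second halves of the text.

    The first half corresponds to substring(text, 0, div(length(text), 2)).
    """
    mid = len(text) // 2
    return text[:mid], text[mid:]


def split_into_chunks(text, max_chars):
    """Generalization: cut the text into pieces of at most max_chars characters.

    max_chars is a hypothetical limit standing in for the AI's capacity.
    """
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


if __name__ == "__main__":
    body = "abcdefgh"  # stand-in for a long plain-text email body
    print(split_in_half(body))
    print(split_into_chunks(body, 3))
```

Splitting purely by character count can cut a value such as a date or serial number in two, so a split at a line break near the midpoint may be safer if the needed fields sit close to the middle of the email.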

After this, I began researching an exchange scheme for them to use in LN with SQL. I gathered the programs I needed in order to create the different exports and imports for them in LN. The problem they were having was that there was no way, automatically at least, for different tables of information to be updated or exported from LN to the other places where they were needed. This made the exchange schemes especially important. For this, I created a Jira ticket listing the information, such as the paths and table relations, that I need to complete this work. That was my last day, as I am beginning fall camp soon.