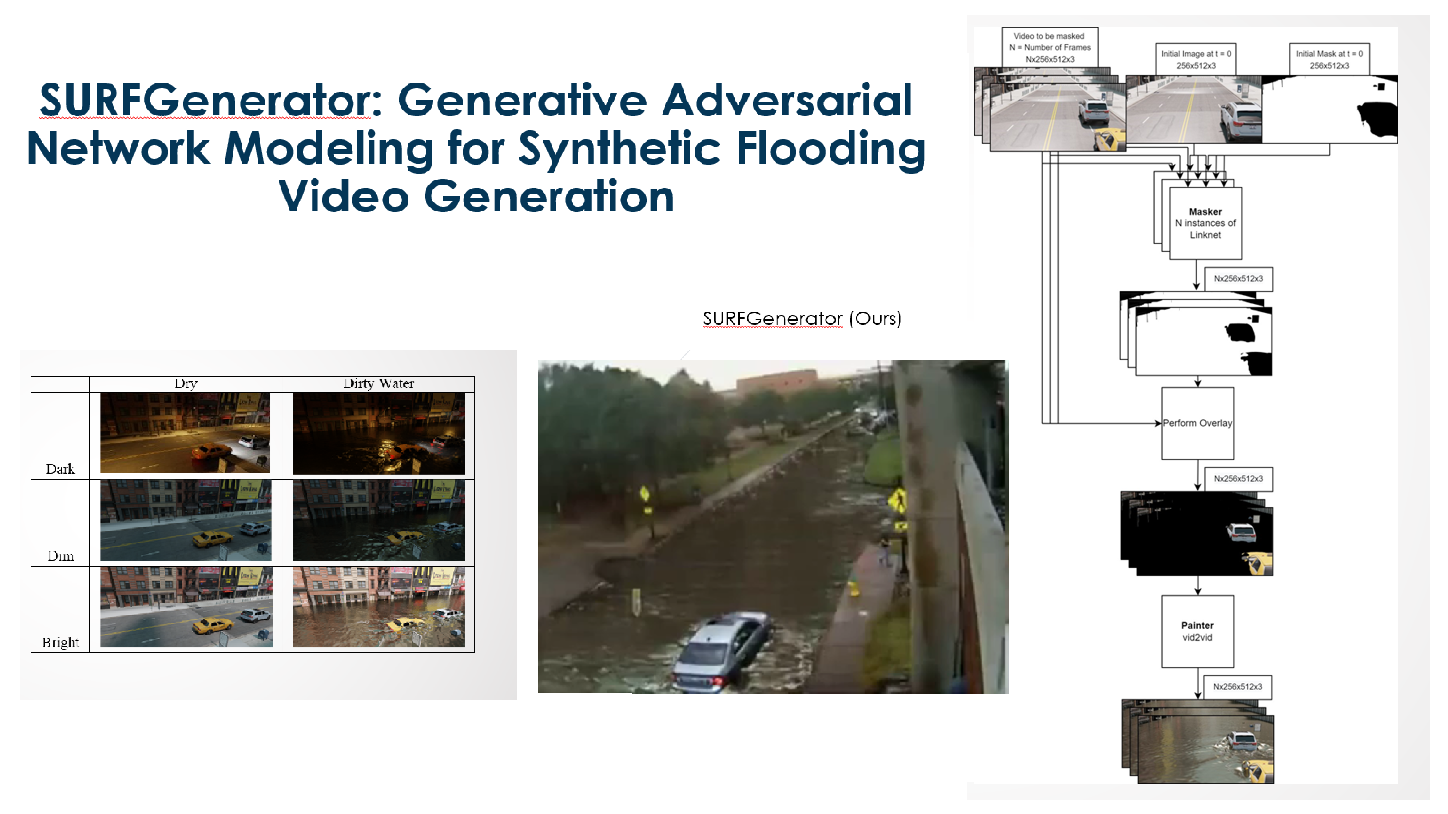

The current lack of labeled large-scale flooding video datasets hinders work in semantic segmentation of flooding videos. Flooding video semantic segmentation is needed in many critical application areas, including recurrent flooding analysis due to sea-level rise and climate change. To address the lack of labeled flooding videos, this work proposes a novel generative adversarial network (GAN)-based Synthetic Urban Recurrent Flooding (SURF) Generator (SURFGenerator) for generating synthetic videos of flooding at different water depth levels. We first generate large-scale training samples of synthetically rendered videos of flooding information using physics-based water simulation tools within Blender and Mantaflow for our model. A two-part model is then developed, inspired by previous work, composed of a semantic segmentation (masker) network using LinkNet followed by an off-the-shelf vid2vid painter network. The masker inference network is trained with rendered images and corresponding binary egmentation masks to identify potential flooding areas for a specific depth level in the video. Finally, the painter network is trained separately using segmented render videos as input. The painter network takes the masks and initial video and generates synthetic water in the masked areas to create an output flooding video. To the best of our knowledge, for the first time in literature, this work generates synthetic flooding videos with physically relevant features such as moving ripples and small waves when cars move on the flooded streets. These synthetically generated realistic flooding videos may be used to generate large-scale labeled images for semantic segmentation and water depth estimation on flooded streets and other urban settings using deep network models. We make our code and data freely available at https://github.com/visionlabodu/SURFGenerator.

Vision Lab

Computational Intelligence and Machine Vision Laboratory